The internet, like many modern technologies, is loved and hated in equal parts, for many reasons. However, there is no refuting that it is a phenomenal source of information (and, unfortunately, a lot of misinformation too) and an invaluable resource. Even on days when I want to escape into the woods and live a Paleolithic life in the forest, I cannot deny that I would miss being able to Google all my random thoughts and questions at 4 AM.

The beauty of the internet, for me, is that I can turn a curious thought into a creative impulse and end up building something I can use over a weekend. This weekend I delved into the weird and wonderful world of AI. Artificial intelligence is something I haven’t had a chance to work with yet, but I have a rapidly growing interest in it, even more so recently after looking into adversarial machine learning. Thanks to these awesome articles, I managed to get my hands dirty and set up an OpenAI GPT-2 text-generation bot after around 18 hours of training with a custom list on my laptop.

https://blog.floydhub.com/gpt2 – Information on what GPT-2 is and what it does

https://github.com/minimaxir/gpt-2-simple – README with usage instructions for the Python module

https://minimaxir.com/2020/01/twitter-gpt2-bot/ – The initial blog post I read about GPT-2 and its applications

https://lambdalabs.com/blog/run-openais-new-gpt-2-text-generator-code-with-your-gpu/ – Environment setup instructions

The idea came about while I was brainstorming company names for a new business venture: my friend, 0x736A, frivolously proposed getting artificial intelligence to do it for me and mentioned GPT-2. Unbeknownst to them, I took the suggestion seriously. It was a fantastic way to procrastinate instead of actually coming up with a name myself, and I was elated to finally get the chance to play about with AI. I had also previously seen some of Janelle Shane’s work with GPT-2, and if you haven’t read her book, I strongly suggest adding it to your reading list.

So I got straight to work researching GPT-2 and other language models: a whole new world of shiny technologies, only some of which I had even heard of. After reading through a horde of blog posts, documentation and other articles, I was more confused than when I had started, which is frequently the case when I venture into uncharted waters. I decided to get my lab environment installed and understand more as I played about with it.

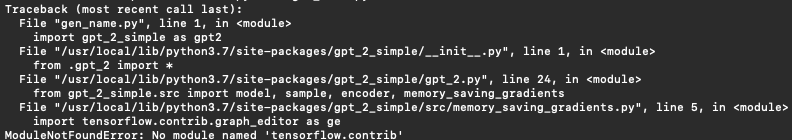

The lab setup was pretty simple. I already had python3 installed, so I just needed to install a few packages using pip3 and resolve a few dependency issues. I also ran into an issue with the version of TensorFlow I was using, as the GPT-2 code I was working with does not support TensorFlow 2.0.

If you install TensorFlow 2.0, it will throw an error stating that there is No module named ‘tensorflow.contrib’. To get around this, I removed the package and installed the latest 1.x version. You also need to install gpt_2_simple along with all of its dependencies.
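The version problem above can be caught before any training starts. The following is only a minimal sketch: the helper names `is_tf1` and `check_installed_tensorflow` are made up for illustration, and the check assumes that any 1.x release is acceptable, which is all the setup described here relies on.

```python
def is_tf1(version: str) -> bool:
    """Return True when a version string such as '1.15.0' is a 1.x release."""
    major = version.split(".")[0]
    return major == "1"


def check_installed_tensorflow() -> str:
    """Describe whether the installed TensorFlow looks usable for this setup."""
    try:
        import tensorflow as tf  # imported lazily, only for this check
    except ImportError:
        return "TensorFlow is not installed"
    if is_tf1(tf.__version__):
        return "TensorFlow " + tf.__version__ + " is a 1.x release"
    # TensorFlow 2.x no longer ships the tensorflow.contrib module
    return "TensorFlow " + tf.__version__ + " is not 1.x; install a 1.x release"
```

Calling `print(check_installed_tensorflow())` before fine-tuning gives a readable message instead of the import error.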

Once everything was set up, I knew I had to do two things: download the base model, for which I used 345M (345 million trainable parameters), and then fine-tune it with custom training material. While I downloaded the model, which sits at around 1.5GB, I began compiling the training data. I needed to collate a large list of company names, so I started with the obvious and added every Fortune 500 company to the list. The rest consisted of the following:

- INC5000 List

- NASDAQ Listed Companies

In total I had 208 MB of company names to feed into my newly downloaded model. Training the bot on this list over 2000 steps took around 10 hours in total, although this varies depending on the hardware you are running. It may also be worth noting that I was using the CPU version of TensorFlow; you can install the GPU version if you have a dedicated graphics card. Before training, I wrapped each company name with a prefix and a suffix so that the start and end of each name was clear, using a quick while loop to iterate over the list. For example:

    while IFS= read -r line; do
      echo "<|startoftext|>$line<|endoftext|>" >> company_names_final.gpt
    done < ./company_names.txt
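For anyone who would rather stay in Python, the same wrapping step could look roughly like this. It is a sketch rather than the script I actually ran: the function names are invented, and unlike the shell loop it also drops blank lines and duplicate names and overwrites the output file instead of appending to it.

```python
from pathlib import Path

START = "<|startoftext|>"
END = "<|endoftext|>"


def wrap_names(names):
    """Wrap each non-empty name in the start/end tokens, dropping duplicates."""
    seen = set()
    wrapped = []
    for name in names:
        name = name.strip()
        if not name or name in seen:
            continue  # skip blank lines and names already emitted
        seen.add(name)
        wrapped.append(START + name + END)
    return wrapped


def build_training_file(src="company_names.txt", dst="company_names_final.gpt"):
    """Read one name per line from src and write the wrapped names to dst."""
    lines = Path(src).read_text(encoding="utf-8").splitlines()
    Path(dst).write_text("\n".join(wrap_names(lines)) + "\n", encoding="utf-8")
```

De-duplicating is a design choice, not a requirement: repeated names in the training data nudge the model towards those names, which may or may not be what you want.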

My training material was now complete and ready to go, and the base model had been downloaded, so I could finally start fine-tuning my bot by executing the following code:

    import gpt_2_simple as gpt2

    # Start a fresh TensorFlow session for fine-tuning
    sess = gpt2.start_tf_sess()

    file_name = "company_names_final.gpt"

    # Fine-tune the 345M base model on the wrapped company names
    gpt2.finetune(sess,
                  dataset=file_name,
                  model_name='345M',
                  steps=2000,
                  restore_from='fresh',  # start from the base model
                  run_name='run1',       # name used to load this run later
                  print_every=10,
                  sample_every=500,
                  save_every=500
                  )

Once the fine-tuning had completed, it was time for the good part: generating text and seeing what my new companion could concoct. The results… did not disappoint. To generate text from the bot you can just use gpt2.generate; however, I wanted to save the output to a file, so I specified a target file and generated 200 samples in batches of 20.

    import gpt_2_simple as gpt2
    from datetime import datetime

    # Load the fine-tuned run into a new session
    sess = gpt2.start_tf_sess()
    gpt2.load_gpt2(sess, run_name='run1')

    # Timestamped output file so earlier results are not overwritten
    gen_file = 'company_names_{:%Y%m%d_%H%M%S}.txt'.format(datetime.utcnow())

    gpt2.generate_to_file(sess,
                          destination_path=gen_file,
                          length=200,
                          temperature=0.7,
                          prefix='<|startoftext|>',
                          truncate='<|endoftext|>',
                          include_prefix=False,
                          nsamples=200,
                          batch_size=20
                          )

It is important to load the session and the run that was created during fine-tuning (see run_name in the previous script) so that you generate against the tuned model. A further step could be to keep separate runs for specific targets. Using the company name generator as an example, you could fine-tune with a specific industry, sector or role in mind: train it with only consultancy or restaurant names, then generate text from the run trained in the area you want a company name for.

For the company name checker, I wanted to write a wrapper script that generates a few samples and queries Companies House (the UK government’s company registrar) so that only the names that are available are displayed. For this I lowered nsamples (the number of generated samples) to 20, then read the gen_file line by line and used curl to query Companies House with each generated company name.
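As a rough illustration of the per-sector idea, the training data could be split into one file per sector, each paired with its own run name. This is a hypothetical sketch and not something I built: `run_name_for`, `write_sector_datasets` and the `run_<sector>` naming scheme are all assumptions.

```python
import re
from pathlib import Path


def run_name_for(sector: str) -> str:
    """Turn a sector label into a filesystem-friendly run name."""
    slug = re.sub(r"[^a-z0-9]+", "_", sector.lower()).strip("_")
    return "run_" + slug


def write_sector_datasets(names_by_sector, out_dir="."):
    """Write one wrapped training file per sector; return {run_name: path}."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    datasets = {}
    for sector, names in names_by_sector.items():
        run_name = run_name_for(sector)
        path = out / (run_name + ".gpt")
        lines = ["<|startoftext|>" + n.strip() + "<|endoftext|>"
                 for n in names if n.strip()]
        path.write_text("\n".join(lines) + "\n", encoding="utf-8")
        datasets[run_name] = str(path)
    return datasets
```

Each returned path would then be passed as `dataset` to `gpt2.finetune` together with the matching `run_name`, and the same `run_name` given to `gpt2.load_gpt2` when generating names for that sector.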

    import gpt_2_simple as gpt2
    import subprocess as sp
    import shlex
    import re
    from datetime import datetime

    sess = gpt2.start_tf_sess()
    gpt2.load_gpt2(sess, run_name='run1')

    # The ./outputs directory must already exist
    gen_file = './outputs/company_names_{:%Y%m%d_%H%M%S}.txt'.format(datetime.utcnow())

    gpt2.generate_to_file(sess,
                          destination_path=gen_file,
                          length=200,
                          temperature=0.7,
                          prefix='<|startoftext|>',
                          truncate='<|endoftext|>',
                          include_prefix=False,
                          nsamples=20,
                          batch_size=20
                          )

    bad_line = '===================='  # separator written between samples
    url = 'https://beta.companieshouse.gov.uk/company-name-availability'

    with open(gen_file) as gf:
        # Iterate over every line so the first sample is not skipped
        for line in gf:
            line_nn = line.strip()
            if not line_nn or line_nn == bad_line:
                continue
            company_name_url_format = line_nn.replace(" ", '+')
            # Quote the query so generated text cannot break the shell command
            query = shlex.quote("q=" + company_name_url_format)
            command = "curl -G --silent --data " + query + " '" + url + "' | grep 'No exact'"
            output = sp.getoutput(command)
            if output != "":
                output_company = re.sub('No exact company name matches found for ', '', output)
                print(output_company + " is available")

This then outputs a few examples of ready-to-use company names:

    "ELIZABETH CHILD CARE LIMITED" is available
    "SANDRA VENTURES LIMITED" is available
    "B RICHARDS COOKE LIMITED" is available
    "MOSCART LIMITED" is available
    "CHIP JANUIS LIMITED" is available
    "BUNKHAM BLUES LIMITED" is available
    "ANALYTIC TRACK LIMITED" is available
    "BLACKSTAR MUSIC LIMITED" is available
    "CRAIG EYEL LIMITED" is available
    "SOUTHERN BRICKWORK LIMITED" is available
    "KUTO LIMITED" is available
    "AFIQUIN LIMITED" is available
    "LONDON SENSE LIMITED" is available
    "NOON PROPERTY INVESTMENTS LIMITED" is available

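The non-model parts of the wrapper (pulling names out of the generated file, building the lookup URL and spotting the availability message) can also be sketched with the standard library alone, which makes them easy to test without curl. The function names below are invented for illustration, and the URL and the "No exact company name matches found for" marker are simply taken from the script above, so they may not match the live site.

```python
from urllib.parse import urlencode

SEPARATOR = "=" * 20  # delimiter line between samples in the generated file
SEARCH_URL = "https://beta.companieshouse.gov.uk/company-name-availability"
AVAILABLE_MARKER = "No exact company name matches found for "


def candidate_names(text):
    """Return the generated names, skipping blank lines and separators."""
    names = []
    for line in text.splitlines():
        name = line.strip()
        if name and name != SEPARATOR:
            names.append(name)
    return names


def lookup_url(name):
    """Build the availability query URL; urlencode escapes the name safely."""
    return SEARCH_URL + "?" + urlencode({"q": name})


def available_name(page_text):
    """Return the text following the marker, or None if the marker is absent."""
    for line in page_text.splitlines():
        if AVAILABLE_MARKER in line:
            return line.split(AVAILABLE_MARKER, 1)[1].strip()
    return None
```

Using `urlencode` instead of replacing spaces by hand also copes with characters such as `&` in a generated name, which would otherwise split the query string.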
Here are some of the best/worst names that cropped up.

BLACKSTAR MUSIC LIMITED GAPMARK LTD SONIC EVER LIVE LLP CHURCH OF LIVING DUBDUBZ LIMITED I HARRISON YOU THANGE WHALLUK SERVICE JUSTA DELIVERY LTD USB DESIGN HQ LTD LYMPICAL SCIENTOLOGY LIMITED FAMILY LOSS LIMITED STICKJOB LIMITED VSC INVESTMENTS LIMITED GUN TECHNICIANS LIMITED VALLEY BAKERY LIMITED PLUS HULK LTD

Finally, the holiest of grails, la crème de la crème, la pièce de résistance…

DRAGMA NUTS LIMITED

Check out the GitHub repo if you want to take a look at the code or generate your own company names here.